import csv

f = open('myclone.csv', 'rb')
reader = csv.reader(f)
headers = reader.next()

# Build one list per column, keyed by header name.
column = {}
for h in headers:
    column[h] = []

for row in reader:
    for h, v in zip(headers, row):
        column[h].append(v)

from PIL import Image

im = Image.open("bride.jpg")
im.rotate(45).show()

from PIL import Image
import glob, os

size = 128, 128

for infile in glob.glob("*.jpg"):
    file, ext = os.path.splitext(infile)
    im = Image.open(infile)
    im.thumbnail(size, Image.ANTIALIAS)
    im.save(file + ".thumbnail", "JPEG")

from PIL import Image

im = Image.new("RGB", (512, 512), "white")

from PIL import Image

im = Image.open("lenna.jpg")

from PIL import Image
from StringIO import StringIO

# read data from string
im = Image.open(StringIO(data))

out = image1 * (1.0 - alpha) + image2 * alpha

If the alpha is 0.0, a copy of the first image is returned. If the alpha is 1.0, a copy of the second image is returned. There are no restrictions on the alpha value. If necessary, the result is clipped to fit into the allowed output range.
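The interpolation formula above can be sketched on plain lists of pixel values. This only illustrates the arithmetic and is not PIL's implementation; the function name `blend_values`, the rounding step, and the 0..255 output range are assumptions made for the example.

```python
def blend_values(pixels1, pixels2, alpha, lo=0, hi=255):
    """Interpolate two equal-length pixel sequences and clip to [lo, hi]."""
    if len(pixels1) != len(pixels2):
        raise ValueError("both inputs must have the same length")
    out = []
    for p1, p2 in zip(pixels1, pixels2):
        value = p1 * (1.0 - alpha) + p2 * alpha
        # Clipping only matters when alpha lies outside 0.0..1.0,
        # where the formula extrapolates instead of interpolating.
        out.append(int(min(hi, max(lo, round(value)))))
    return out
```

With an alpha of 0.0 the first sequence comes back unchanged, with 1.0 the second one does, and an alpha such as 2.0 extrapolates past the second image before the result is clipped.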

im = Image.frombuffer(mode, size, data, "raw", mode, 0, 1)

Image.frombuffer(mode, size, data, decoder, parameters)

L = R * 299/1000 + G * 587/1000 + B * 114/1000

When converting to a bilevel image (mode “1”), the source image is first converted to black and white. Resulting values larger than 127 are then set to white, and the image is dithered. To use other thresholds, use the point method. To disable dithering, use the dither= option (see below).
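As a rough illustration of the two steps just described, the sketch below applies the luma weights to a single RGB pixel and then thresholds the result at 127. The function names are hypothetical, integer floor division is an assumption about rounding, and dithering is deliberately left out.

```python
def to_luma(r, g, b):
    """Greyscale value from the integer weights 299, 587 and 114 (per 1000)."""
    return (r * 299 + g * 587 + b * 114) // 1000


def to_bilevel(luma, threshold=127):
    """Plain thresholding without dithering: values above the threshold become white."""
    return 255 if luma > threshold else 0
```

Because the three weights sum to 1000, pure white stays at 255 and pure black stays at 0.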

rgb2xyz = (

0.412453, 0.357580, 0.180423, 0,

0.212671, 0.715160, 0.072169, 0,

0.019334, 0.119193, 0.950227, 0 )

out = im.convert("RGB", rgb2xyz)

pix = im.load()

print pix[x, y]

pix[x, y] = value

argument * scale + offset

Example:

out = im.point(lambda i: i * 1.2 + 10)

You can leave out either the scale or the offset.

pixel = value * scale + offset

If the scale is omitted, it defaults to 1.0. If the offset is omitted, it defaults to 0.0.
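A minimal sketch of the scale-and-offset mapping, written as a lookup table over 8-bit levels. The table approach, the truncation to integers, and the clipping to 0..255 are assumptions for this example rather than a description of PIL internals; `build_lookup_table` and `apply_table` are hypothetical names.

```python
def build_lookup_table(scale=1.0, offset=0.0, levels=256):
    """Map each input level to level * scale + offset, clipped to the valid range."""
    table = []
    for level in range(levels):
        value = level * scale + offset
        table.append(int(min(levels - 1, max(0, value))))
    return table


def apply_table(pixels, table):
    """Replace every pixel value by its table entry."""
    return [table[p] for p in pixels]
```

Leaving out both arguments yields the identity table, which matches the stated defaults of 1.0 for the scale and 0.0 for the offset.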

im.load()

putpixel = im.im.putpixel

for i in range(n):
    ...
    putpixel((x, y), value)

In 1.1.6, the above is better written as:

pix = im.load()
for i in range(n):
    ...
    pix[x, y] = value

out = im.convert("P", palette=Image.ADAPTIVE, colors=256)

1)
f = open("rockyou.txt", "r")
for line in f:
    print line

2)
with open("rockyou.txt", 'r') as f:
    for line in f:
        print line

public static Boolean validateEmail(String email) {

String EMAIL_PATTERN = "^[_A-Za-z0-9-\\+]+(\\.[_A-Za-z0-9-]+)*@"

+ "[A-Za-z0-9-]+(\\.[A-Za-z0-9]+)*(\\.[A-Za-z]{2,})$";

Pattern pattern = Pattern.compile(EMAIL_PATTERN);

Matcher matcher = pattern.matcher(email);

return matcher.matches();

}

/**

* JSON object input stream.

*

* @param in

* the in

* @return the JSON object

* @throws IOException

* Signals that an I/O exception has occurred.

*/

public static JSONObject jsonObjectInputStream(InputStream in)

throws IOException {

String line;

BufferedReader br = new BufferedReader(new InputStreamReader(in,

Charset.forName("UTF-8")));

JSONObject json = new JSONObject();

while ((line = br.readLine()) != null) {

try {

json = new JSONObject(line);

} catch (JSONException e) {

return null;

}

}

return json;

}

String login = "ACCOUNT_SID" + ":" + "AUTH_TOKEN";

String base64login = new String(Base64.encodeBase64(login.getBytes()));

String phoneNumber = "+14222456789";

String messageText = "Hello, World!";

try {

Response response = Jsoup

.connect(

"https://api.twilio.com/2010-04-01/Accounts/{ACCOUNT_SID}/Messages.json")

.header("Authorization", "Basic " + base64login)

.timeout(10000).method(Method.POST).data("To", phoneNumber)

.data("From", "+14211456789").data("Body", messageText)

.execute();

if (response.statusCode() == 201) {

System.out.println("send message");

} else {

System.out.println("Message Send Failure");

}

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

public static int getAge(long dateOfBirth) {

Calendar today = Calendar.getInstance();

Calendar birthDate = Calendar.getInstance();

int age = 0;

birthDate.setTimeInMillis(dateOfBirth);

if (birthDate.after(today)) {

return -1;

}

age = today.get(Calendar.YEAR) - birthDate.get(Calendar.YEAR);

// If birth date is greater than todays date (after 2 days adjustment of

// leap year) then decrement age one year

if ((birthDate.get(Calendar.DAY_OF_YEAR)

- today.get(Calendar.DAY_OF_YEAR) > 3)

|| (birthDate.get(Calendar.MONTH) > today.get(Calendar.MONTH))) {

age--;

// If birth date and todays date are of same month and birth day of

// month is greater than todays day of month then decrement age

} else if ((birthDate.get(Calendar.MONTH) == today.get(Calendar.MONTH))

&& (birthDate.get(Calendar.DAY_OF_MONTH) > today

.get(Calendar.DAY_OF_MONTH))) {

age--;

}

return age;

}
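The same age rule can be sketched in Python by comparing (month, day) pairs, which avoids the day-of-year adjustment for leap years. This is an illustrative alternative, not a translation of the Java method: `get_age` is a hypothetical name, and `today` is passed in explicitly so the result is reproducible.

```python
from datetime import date


def get_age(birth_date, today):
    """Return completed years, or -1 if birth_date lies in the future."""
    if birth_date > today:
        return -1
    age = today.year - birth_date.year
    # The birthday has not occurred yet this year if the birth
    # (month, day) pair sorts after today's (month, day) pair.
    if (birth_date.month, birth_date.day) > (today.month, today.day):
        age -= 1
    return age
```

A birth date of 29 February is treated as not yet reached on 28 February and as reached from 1 March onward in non-leap years.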

/**

* Convert list string to bytes array.

*

* @param listString

* the list string

* @return the byte[]

* @throws IOException

* Signals that an I/O exception has occurred.

*/

public static byte[] convertListStringToBytesArray(List<String> listString)

throws IOException {

ByteArrayOutputStream baos = new ByteArrayOutputStream();

DeflaterOutputStream out = new DeflaterOutputStream(baos);

DataOutputStream dos = new DataOutputStream(out);

for (String i : listString) {

dos.writeUTF(i);

}

dos.close();

out.close();

baos.close();

return baos.toByteArray();

}

/**

* Convert bytes array to list string.

*

* @param byteArrays

* the byte arrays

* @return the list

* @throws IOException

* Signals that an I/O exception has occurred.

*/

public static List<String> convertBytesArrayToListString(byte[] byteArrays)

throws IOException {

ByteArrayInputStream bais = new ByteArrayInputStream(byteArrays);

InflaterInputStream iis = new InflaterInputStream(bais);

DataInputStream dins = new DataInputStream(iis);

List<String> listRs = new ArrayList<String>();

while (true) {

try {

String value = dins.readUTF();

listRs.add(value);

} catch (EOFException e) {

break;

}

}

dins.close();

iis.close();

bais.close();

return listRs;

}
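For comparison, here is a Python sketch of the same round trip: each string is written with a 2-byte length prefix, the records are compressed, and the reverse step reads records until the data runs out. The function names are hypothetical. The layout imitates `DataOutputStream.writeUTF`, but Java uses a modified UTF-8 encoding, so the output should not be assumed to be byte-compatible with the Java methods above.

```python
import struct
import zlib


def strings_to_bytes(strings):
    """Length-prefix each UTF-8 string (2 bytes, big-endian), then compress."""
    chunks = []
    for s in strings:
        encoded = s.encode("utf-8")
        if len(encoded) > 0xFFFF:
            raise ValueError("string too long for a 2-byte length prefix")
        chunks.append(struct.pack(">H", len(encoded)) + encoded)
    return zlib.compress(b"".join(chunks))


def bytes_to_strings(data):
    """Inverse of strings_to_bytes: decompress, then read records to the end."""
    raw = zlib.decompress(data)
    strings, pos = [], 0
    while pos < len(raw):
        (length,) = struct.unpack_from(">H", raw, pos)
        pos += 2
        strings.append(raw[pos:pos + length].decode("utf-8"))
        pos += length
    return strings
```

Reading until the buffer is exhausted plays the role that catching `EOFException` plays in the Java version.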

String hostName = "localhost";

try {

InetAddress addr = InetAddress.getLocalHost();

String suggestedName = addr.getCanonicalHostName();

// Rough test for IP address, if IP address assume a local lookup

// on VPN

if (!suggestedName.matches("(\\d{1,3}\\.?){4}") && !suggestedName.contains(":")) {

hostName = suggestedName;

}

} catch (UnknownHostException ex) {

}

System.out.println(hostName);

The problem is that we have to trust the local machine's settings, for example /etc/hostname, which can result in a network name that is not accessible from another machine. To counter this, I wrote the following code to walk the available network interfaces and find a remotely addressable network name that can be used to talk back to this machine. (I could use an IP address, but they are harder to remember, particularly as we move towards IPv6.)

String hostName = stream(wrap(NetworkInterface::getNetworkInterfaces).get())

// Only allow interfaces that are functioning

.filter(wrap(NetworkInterface::isUp))

// Flat map to any bound addresses

.flatMap(n -> stream(n.getInetAddresses()))

// Filter out any local addresses

.filter(ia -> !ia.isAnyLocalAddress() && !ia.isLinkLocalAddress() && !ia.isLoopbackAddress())

// Map to a name

.map(InetAddress::getCanonicalHostName)

// Ignore if we just got an IP back

.filter(suggestedName -> !suggestedName.matches("(\\d{1,3}\\.?){4}")

&& !suggestedName.contains(":"))

.findFirst()

// In my case default to localhost

.orElse("localhost");

System.out.println(hostName);
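The last two stages of the pipeline, dropping anything that looks like an IP literal and falling back to localhost, can be sketched in isolation. The sketch works on a plain list of candidate names, so it does not touch the network; `looks_like_ip` and `pick_host_name` are hypothetical helper names, and the regular expression is the same rough test used in the Java code.

```python
import re

# Same rough IPv4 test as the Java snippet; the ":" check covers IPv6 literals.
_IPV4_LIKE = re.compile(r"(\d{1,3}\.?){4}")


def looks_like_ip(name):
    """True if the whole name matches the rough IPv4 pattern or contains a colon."""
    return _IPV4_LIKE.fullmatch(name) is not None or ":" in name


def pick_host_name(candidates, default="localhost"):
    """Return the first candidate that is a name rather than an IP literal."""
    for name in candidates:
        if not looks_like_ip(name):
            return name
    return default
```

`fullmatch` mirrors the behaviour of Java's `String.matches`, which also requires the entire string to match.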

You might notice that a few support methods are used in there to tidy up the code. Here are the required support methods, if you are interested.

@FunctionalInterface

public interface ThrowingPredicate<T, E extends Exception>{

boolean test(T t) throws E;

}

@FunctionalInterface

public interface ThrowingSupplier<T, E extends Exception>{

T get() throws E;

}

public static <T, E extends Exception> Predicate<T> wrap(ThrowingPredicate<T, E> th) {

return t -> {

try {

return th.test(t);

} catch (Exception ex) {

throw new RuntimeException(ex);

}

};

}

public static <T, E extends Exception> Supplier<T> wrap(ThrowingSupplier<T, E> th) {

return () -> {

try {

return th.get();

} catch (Exception ex) {

throw new RuntimeException(ex);

}

};

}

// http://stackoverflow.com/a/23276455

public static <T> Stream<T> stream(Enumeration<T> e) {

return StreamSupport.stream(

Spliterators.spliteratorUnknownSize(

new Iterator<T>() {

public T next() {

return e.nextElement();

}

public boolean hasNext() {

return e.hasMoreElements();

}

},

Spliterator.ORDERED), false);

}

Reference: Getting a name for someone to connect back to your server, from our JCG partner Gerard Davison at Gerard Davison’s blog.

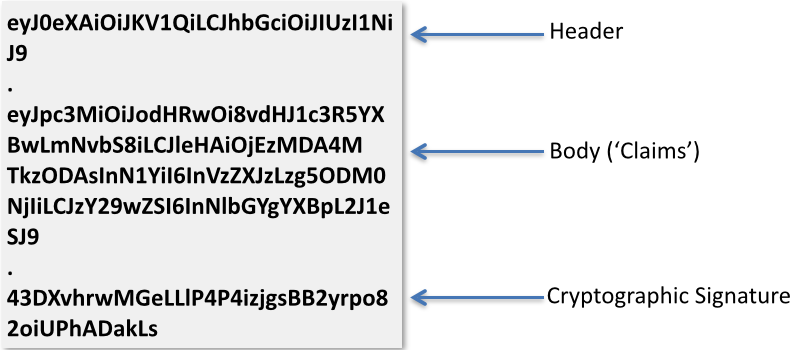

authentication and token in this context.

If you look carefully, you can see that there are two periods in the string. These are significant as they delimit different sections of the JWT.

eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJodHRwOi8vdHJ1c3R5YXBwLmNvbS8iLCJleHAiOjEzMDA4MTkzODAsInN1YiI6InVzZXJzLzg5ODM0NjIiLCJzY29wZSI6InNlbGYgYXBpL2J1eSJ9.43DXvhrwMGeLLlP4P4izjgsBB2yrpo82oiUPhADakLs

eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9 . eyJpc3MiOiJodHRwOi8vdHJ1c3R5YXBwLmNvbS8iLCJleHAiOjEzMDA4MTkzODAsInN1YiI6InVzZXJzLzg5ODM0NjIiLCJzY29wZSI6InNlbGYgYXBpL2J1eSJ9 . 43DXvhrwMGeLLlP4P4izjgsBB2yrpo82oiUPhADakLs

Header

{

"typ": "JWT",

"alg": "HS256"

}

Claims

{

"iss":"http://trustyapp.com/",

"exp": 1300819380,

"sub": "users/8983462",

"scope": "self api/buy"

}

Cryptographic Signature

tß´—™à%O˜v+nî…SZu¯µ€U…8H×

iss is who issued the token.

exp is when the token expires.

sub is the subject of the token. This is usually a user identifier of some sort.

scope is not included in the specification, but it is commonly used to provide authorization information. That is, what parts of the application the user has access to.

scope above. Another advantage is that the client can now react to this information without any further interaction with the server. For instance, a portion of the page may be hidden based on the data found in the scope claim.

sub claim. When a JWT is signed, it’s referred to as a JWS. When it’s encrypted, it’s referred to as a JWE.
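The three period-delimited sections described above can be pulled apart with the standard library alone. This is a minimal sketch that only decodes; it does not verify the signature, so its output must not be trusted for authorization decisions. The helper names are hypothetical.

```python
import base64
import json


def b64url_encode(data):
    """Base64url-encode bytes and drop the padding, as JWT segments do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment):
    """Restore the dropped padding, then base64url-decode a JWT segment."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def split_jwt(token):
    """Return (header dict, claims dict, raw signature bytes) without verifying."""
    header_b64, claims_b64, signature_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    claims = json.loads(b64url_decode(claims_b64))
    return header, claims, b64url_decode(signature_b64)
```

Applied to the first segment of the sample token, `b64url_decode("eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9")` yields the header JSON with `typ` set to `JWT` and `alg` set to `HS256`.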

import io.jsonwebtoken.Jwts;

import io.jsonwebtoken.SignatureAlgorithm;

byte[] key = getSignatureKey();

String jwt =

Jwts.builder().setIssuer("http://trustyapp.com/")

.setSubject("users/1300819380")

.setExpiration(expirationDate)

.put("scope", "self api/buy")

.signWith(SignatureAlgorithm.HS256,key)

.compact();

compact call which returns the final JWT string.

.setSubject("users/1300819380"). When a custom claim is set, we use a call to put and specify both the key and value. For example: .put("scope", "self api/buy")

String subject = "HACKER";

try {

Jws<Claims> claims =

Jwts.parser().setSigningKey(key).parseClaimsJws(jwt);

subject = claims.getBody().getSubject();

//OK, we can trust this JWT

} catch (SignatureException e) {

//don't trust the JWT!

}

SignatureException and the value of the subject variable will stay HACKER. If it’s a valid JWT, then subject will be extracted from it: claims.getBody().getSubject()

POST /oauth/token HTTP/1.1
Origin: https://foo.com
Content-Type: application/x-www-form-urlencoded

grant_type=password&username=username&password=password

grant_type is required. The application/x-www-form-urlencoded

content type is required for this type of interaction as well. Given

that you are passing the username and password over the wire, you would always

want the connection to be secure. The good thing, however, is that the

response will have an OAuth2 bearer token. This token will then be used

for every interaction between the browser and server going forward.

There is a very brief exposure here where the username and password are

passed over the wire. Assuming the authentication service on the server

verifies the username and password, here’s the response:

HTTP/1.1 200 OK

Content-Type: application/json;charset=UTF-8

Cache-Control: no-store

Pragma: no-cache

{

"access_token":"2YotnFZFEjr1zCsicMWpAA...",

"token_type":"example",

"expires_in":3600,

"refresh_token":"tGzv3JOkF0XG5Qx2TlKWIA...",

"example_parameter":"example_value"

}

Notice the Cache-Control and Pragma headers. We don’t want this response being cached anywhere. The access_token is what will be used by the browser in subsequent requests. Again, there is no direct relationship between OAuth2 and JWT. However, the access_token can be a JWT. That’s where the extra benefit of the encoded metadata comes in. Here’s how the access token is leveraged in future requests:

GET /admin HTTP/1.1
Authorization: Bearer 2YotnFZFEjr1zCsicMW...

The Authorization header is a standard header. No custom headers are required to use OAuth2. Rather than the type being Basic, in this case the type is Bearer. The access token is included directly after the Bearer keyword. This completes the OAuth2 interaction for the password grant type. Every subsequent request from the browser can use the Authorization: Bearer header with the access token.

client_credentials which uses client_id and client_secret, rather than username and password. This grant type is typically used for API interactions. While the client id and client secret function similarly to a username and password, they usually offer higher-quality security and are not necessarily human readable.
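The two client-side pieces of the password grant, the form-encoded request body and the Bearer header built from the response, can be sketched as follows. Nothing is sent over the network here; `password_grant_body` and `bearer_header` are hypothetical helper names, and a real client would post the body over HTTPS to the token endpoint.

```python
import json
from urllib.parse import urlencode


def password_grant_body(username, password):
    """Form-encode the fields of a password-grant token request."""
    return urlencode({
        "grant_type": "password",
        "username": username,
        "password": password,
    })


def bearer_header(token_response_json):
    """Build the Authorization header from a token endpoint's JSON response."""
    token = json.loads(token_response_json)["access_token"]
    return {"Authorization": "Bearer " + token}
```

`urlencode` percent-encodes characters such as `+` and `@` in the username, which is why the body must be sent with the application/x-www-form-urlencoded content type.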

@RestController

public class HelloController {

@RequestMapping("/")

String home(HttpServletRequest request) {

String name = "World";

Account account = AccountResolver.INSTANCE.getAccount(request);

if (account != null) {

name = account.getGivenName();

}

return "Hello " + name + "!";

}

}

Account account = AccountResolver.INSTANCE.getAccount(request);

account will resolve to an Account object (and not be null) ONLY if an authenticated session is present.

☺ dogeared jobs:0 ~/Projects/StormPath/stormpath-sdk-java (master|8100m)
➥ cd examples/spring-boot-webmvc/
☺ dogeared jobs:0 ~/Projects/StormPath/stormpath-sdk-java/examples/spring-boot-webmvc (master|8100m)
➥ mvn clean package
[INFO] Scanning for projects...
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Building Stormpath Java SDK :: Examples :: Spring Boot Webapp 1.0.RC4.6-SNAPSHOT
[INFO] ------------------------------------------------------------------------
... skipped output ...
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 4.865 s
[INFO] Finished at: 2015-08-04T11:46:05-04:00
[INFO] Final Memory: 31M/224M
[INFO] ------------------------------------------------------------------------
☺ dogeared jobs:0 ~/Projects/StormPath/stormpath-sdk-java/examples/spring-boot-webmvc (master|8100m

☺ dogeared jobs:0 ~/Projects/StormPath/stormpath-sdk-java/examples/spring-boot-webmvc (master|8104m)
➥ java -jar target/stormpath-sdk-examples-spring-boot-web-1.0.RC4.6-SNAPSHOT.jar

  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::        (v1.2.1.RELEASE)

2015-08-04 11:51:00.127 INFO 17973 --- [ main] tutorial.Application : Starting Application v1.0.RC4.6-SNAPSHOT on MacBook-Pro.local with PID 17973
... skipped output ...
2015-08-04 11:51:04.558 INFO 17973 --- [ main] s.b.c.e.t.TomcatEmbeddedServletContainer : Tomcat started on port(s): 8080 (http)
2015-08-04 11:51:04.559 INFO 17973 --- [ main] tutorial.Application : Started Application in 4.599 seconds (JVM running for 5.103)

~/.stormpath/apiKey.properties. Look here for more information on quick setup of Stormpath with Spring Boot.

➥ http -v localhost:8080
GET / HTTP/1.1
Accept: */*
Accept-Encoding: gzip, deflate
Connection: keep-alive
Host: localhost:8080
User-Agent: HTTPie/0.9.2

HTTP/1.1 200 OK
Accept-Charset: big5, big5-hkscs, cesu-8, euc-jp, euc-kr, gb18030, ...
Content-Length: 12
Content-Type: text/plain;charset=UTF-8
Date: Tue, 04 Aug 2015 15:56:41 GMT
Server: Apache-Coyote/1.1

Hello World!

-v parameter produces verbose output and shows all the headers for the request and the response. In this case, the output message is simply: Hello World!. This is because there is not an established session.

oauth endpoint so that our server can authenticate with Stormpath. You may ask, “What oauth endpoint?” The controller above doesn’t indicate any such endpoint. Are there other controllers with other endpoints in the example? No, there are not! Stormpath gives you oauth (and many other) endpoints right out-of-the-box. Check it out:

➥ http -v --form POST http://localhost:8080/oauth/token \

> 'Origin:http://localhost:8080' \

> grant_type=password username=micah+demo.jsmith@stormpath.com password=

POST /oauth/token HTTP/1.1

Content-Type: application/x-www-form-urlencoded; charset=utf-8

Host: localhost:8080

Origin: http://localhost:8080

User-Agent: HTTPie/0.9.2

grant_type=password&username=micah%2Bdemo.jsmith%40stormpath.com&password=

HTTP/1.1 200 OK

Cache-Control: no-store

Content-Length: 325

Content-Type: application/json;charset=UTF-8

Date: Tue, 04 Aug 2015 16:02:08 GMT

Pragma: no-cache

Server: Apache-Coyote/1.1

Set-Cookie: account=eyJhbGciOiJIUzI1NiJ9.eyJqdGkiOiIxNDQyNmQxMy1mNThiLTRhNDEtYmVkZS0wYjM0M2ZjZDFhYzAiLCJpYXQiOjE0Mzg3MDQxMjgsInN1YiI6Imh0dHBzOi8vYXBpLnN0b3JtcGF0aC5jb20vdjEvYWNjb3VudHMvNW9NNFdJM1A0eEl3cDRXaURiUmo4MCIsImV4cCI6MTQzODk2MzMyOH0.wcXrS5yGtUoewAKqoqL5JhIQ109s1FMNopL_50HR_t4; Expires=Wed, 05-Aug-2015 16:02:08 GMT; Path=/; HttpOnly

{

"access_token": "eyJhbGciOiJIUzI1NiJ9.eyJqdGkiOiIxNDQyNmQxMy1mNThiLTRhNDEtYmVkZS0wYjM0M2ZjZDFhYzAiLCJpYXQiOjE0Mzg3MDQxMjgsInN1YiI6Imh0dHBzOi8vYXBpLnN0b3JtcGF0aC5jb20vdjEvYWNjb3VudHMvNW9NNFdJM1A0eEl3cDRXaURiUmo4MCIsImV4cCI6MTQzODk2MzMyOH0.wcXrS5yGtUoewAKqoqL5JhIQ109s1FMNopL_50HR_t4",

"expires_in": 259200,

"token_type": "Bearer"

}

httpie that I want to make a form url-encoded POST; that’s what the --form and POST parameters do. I am hitting the /oauth/token endpoint of my locally running server. I specify an Origin header. This is required to interact with Stormpath for the security reasons we talked about previously. As per the OAuth2 spec, I am passing up grant_type=password along with a username and password.

Set-Cookie header as well as a JSON body containing the OAuth2 access token. And guess what? That access token is also a JWT. Here are the claims decoded:

{

"jti": "14426d13-f58b-4a41-bede-0b343fcd1ac0",

"iat": 1438704128,

"sub": "https://api.stormpath.com/v1/accounts/5oM4WI3P4xIwp4WiDbRj80",

"exp": 1438963328

}

Notice the sub key. That’s the full Stormpath URL to the account I authenticated as. Now, let’s hit our basic Hello World endpoint again, only this time, we will use the OAuth2 access token:

➥ http -v localhost:8080 \
> 'Authorization: Bearer eyJhbGciOiJIUzI1NiJ9.eyJqdGkiOiIxNDQyNmQxMy1mNThiLTRhNDEtYmVkZS0wYjM0M2ZjZDFhYzAiLCJpYXQiOjE0Mzg3MDQxMjgsInN1YiI6Imh0dHBzOi8vYXBpLnN0b3JtcGF0aC5jb20vdjEvYWNjb3VudHMvNW9NNFdJM1A0eEl3cDRXaURiUmo4MCIsImV4cCI6MTQzODk2MzMyOH0.wcXrS5yGtUoewAKqoqL5JhIQ109s1FMNopL_50HR_t4'
GET / HTTP/1.1
Authorization: Bearer eyJhbGciOiJIUzI1NiJ9.eyJqdGkiOiIxNDQyNmQxMy1mNThiLTRhNDEtYmVkZS0wYjM0M2ZjZDFhYzAiLCJpYXQiOjE0Mzg3MDQxMjgsInN1YiI6Imh0dHBzOi8vYXBpLnN0b3JtcGF0aC5jb20vdjEvYWNjb3VudHMvNW9NNFdJM1A0eEl3cDRXaURiUmo4MCIsImV4cCI6MTQzODk2MzMyOH0.wcXrS5yGtUoewAKqoqL5JhIQ109s1FMNopL_50HR_t4
Connection: keep-alive
Host: localhost:8080
User-Agent: HTTPie/0.9.2

HTTP/1.1 200 OK
Content-Length: 11
Content-Type: text/plain;charset=UTF-8
Date: Tue, 04 Aug 2015 16:44:28 GMT
Server: Apache-Coyote/1.1

Hello John!

Notice on the last line of the output that the message addresses us by name. Now that we’ve established an authenticated session with Stormpath using OAuth2, these lines in the controller retrieve the first name:

Account account = AccountResolver.INSTANCE.getAccount(request);

if (account != null) {

name = account.getGivenName();

}
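Returning to the decoded claims shown earlier: iat and exp are timestamps in seconds since the Unix epoch, so their difference is the token lifetime, and it agrees with the expires_in value in the token response. The small sketch below works through that arithmetic; `seconds_remaining` is a hypothetical helper, and the current time is passed in explicitly to keep the example reproducible.

```python
def seconds_remaining(claims, now):
    """Seconds until the token expires; negative once it has expired."""
    return claims["exp"] - now


claims = {"iat": 1438704128, "exp": 1438963328}

# Lifetime of the token: 259200 seconds, which is 3 days.
lifetime = claims["exp"] - claims["iat"]
```

A client could use a check like this to decide when to request a fresh token instead of waiting for the server to reject an expired one.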

"com.wordnik" %% "swagger-play2" % "1.3.12" exclude("org.reflections", "reflections"),

"org.reflections" % "reflections" % "0.9.8" notTransitive (),

"org.webjars" % "swagger-ui" % "2.1.8-M1"

api.version="1.0"
swagger.api.basepath="http://localhost:9000"

GET     /                       controllers.Assets.at(path="/public", file="index.html")
GET     /api-docs               controllers.ApiHelpController.getResources
POST    /login                  controllers.SecurityController.login()
POST    /logout                 controllers.SecurityController.logout()
GET     /api-docs/api/todos     controllers.ApiHelpController.getResource(path = "/api/todos")
GET     /todos                  controllers.TodoController.getAllTodos()
POST    /todos                  controllers.TodoController.createTodo()

# Map static resources from the /public folder to the /assets URL path
GET     /assets/*file           controllers.Assets.at(path="/public", file)

@Api(value = "/api/todos", description = "Operations with Todos")
@Security.Authenticated(Secured.class)
public class TodoController extends Controller {

    @ApiOperation(value = "get All Todos",
            notes = "Returns List of all Todos",
            response = Todo.class,
            httpMethod = "GET")
    public static Result getAllTodos() {
        return ok(toJson(models.Todo.findByUser(SecurityController.getUser())));
    }

    @ApiOperation(
            nickname = "createTodo",
            value = "Create Todo",
            notes = "Create Todo record",
            httpMethod = "POST",
            response = Todo.class
    )
    @ApiImplicitParams({
            @ApiImplicitParam(
                    name = "body",
                    dataType = "Todo",
                    required = true,
                    paramType = "body",
                    value = "Todo"
            )
    })
    @ApiResponses(value = {
            @com.wordnik.swagger.annotations.ApiResponse(code = 400, message = "Json Processing Exception")
    })
    public static Result createTodo() {
        Form<models.Todo> form = Form.form(models.Todo.class).bindFromRequest();
        if (form.hasErrors()) {
            return badRequest(form.errorsAsJson());
        } else {
            models.Todo todo = form.get();
            todo.user = SecurityController.getUser();
            todo.save();
            return ok(toJson(todo));
        }
    }
}

http://localhost:9000/assets/lib/swagger-ui/index.html?url=http://localhost:9000/api-docs